Be honest: have you ever visited ChatGPT, ChatSonic or a similar website for help with a homework assignment? The capabilities of these sites certainly make it tempting.

For more than a year now, the world has changed drastically in many respects thanks to the rapid rise of Artificial Intelligence, or AI. AI commonly works behind the scenes in major platforms and software to improve user experience and convenience. Think about your phone keyboard suggesting words as you type a text message. That is AI.

Artificial Intelligence is now also available directly to everyday Internet users. AI chatbots can hold human-like conversations, answer academic questions (often accurately) within a few seconds and research complex topics that users propose, among numerous other awe-inspiring capabilities.

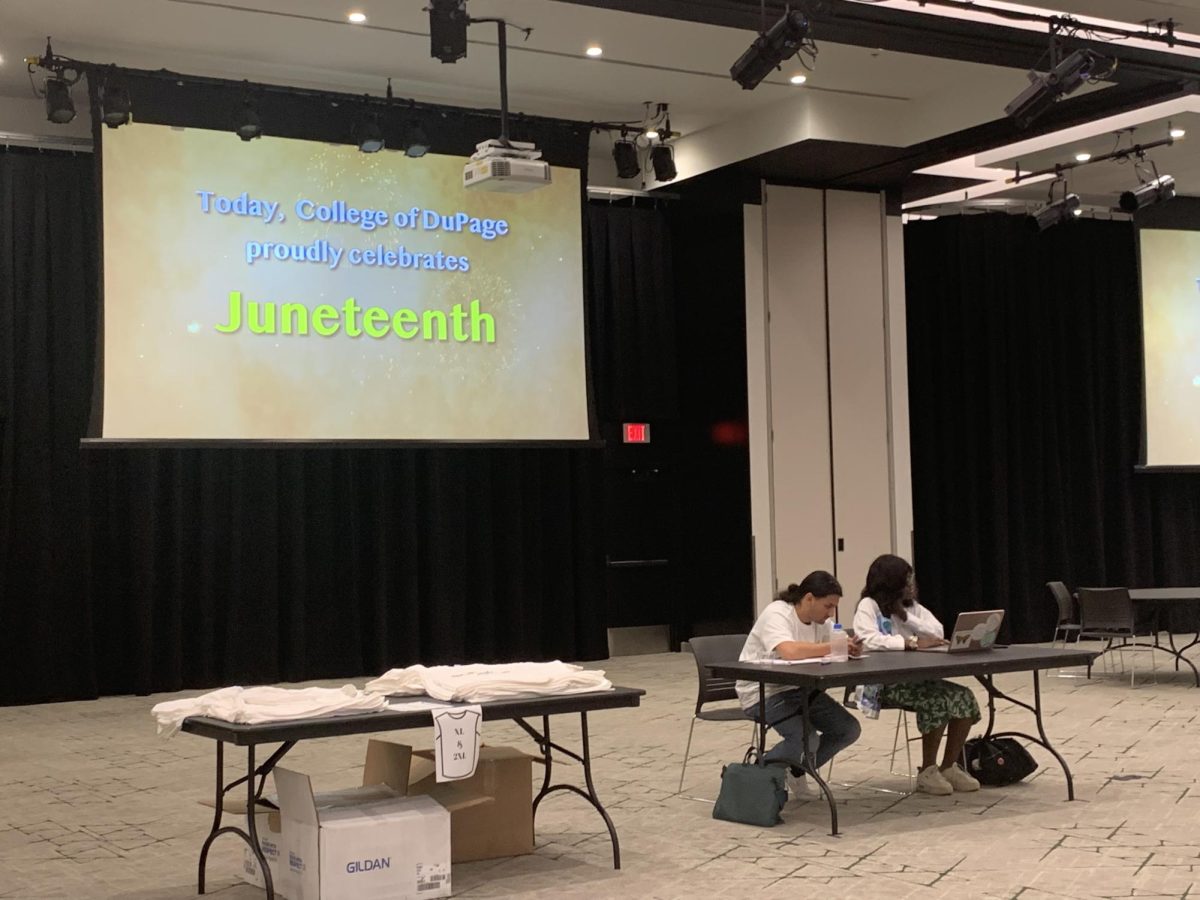

Unsurprisingly, students of all ages are turning to these AI websites for help with their education. But when is help from AI too much help? College of DuPage Student Conduct Officer Assia Baker shared the position of the college's Academic Conduct Committee on Artificial Intelligence. The committee was established in 2015 at faculty request to help maintain academic integrity on campus.

“If used correctly and if used with integrity, our students can benefit from it,” Baker said. “But if your faculty does prohibit you from using AI, then we support them in that regard. We want our students to produce their own work.”

College of DuPage psychology student Sabrina Ali is among those who use Artificial Intelligence, particularly for help with grammar, citations and brainstorming. She appreciates the short-term convenience it offers users but fears its long-term implications.

“As a student, I feel positive about AI because AI can help me brainstorm ideas and answer questions quickly and accurately,” she said. “From other perspectives [such as AI replacing jobs], I don’t really like AI. The benefits of AI are that it can quickly answer questions and can help people get inspired. I find myself using AI for school frequently, so around 3 instances in a week.”

College of DuPage English Professor Eric Tan shares similar beliefs.

“Primarily in English, the rise of ChatGPT and other chatbots is obviously going to be a primary hurdle [to learning] going forward,” Tan said. “As someone who’s used ChatGPT myself and has a bit of a rudimentary understanding of how it operates and works, I can definitely say that it is an extremely helpful piece of technology but also can be somewhat terrifying knowing its capabilities.”

According to Tan, about a quarter of his students are aware of ChatGPT, currently one of the most popular AI chatbots, and somewhat fewer have actually used it.

“From what I have gathered just from my own initial surveys in my classes, I would probably say that 25% of the students are aware of what ChatGPT is, and then maybe 15 to 20% have used it,” he reported.

“Whereas the majority of my students still are either unfamiliar with it or have not been introduced to it. So I have not seen anything extensive in terms of the output that the program has provided.”

Tan suggested Artificial Intelligence could be used to enhance learning rather than detract from it.

“One of the things that I try to do when implementing ChatGPT or that sort of technology is first and foremost acknowledging it, doing a sort of introductory walkthrough with students to show them the capabilities of ChatGPT, how it can be helpful to sort of be the idea generator, but it shouldn’t be a replacement for your own ideas and your own input and your own research,” Tan explained. “And then also addressing some of the limits that ChatGPT may have currently, and then finally going into the potential of where ChatGPT can go and how we can sort of harmonize a classroom with what we do on a regular basis, and then what ChatGPT can add to the overall [learning] experience.”

Ali finds that Artificial Intelligence helps clarify concepts that initially seem vague or unclear. She described her process for using AI chatbots.

“I was writing a paper for ethics,” she recalled. “I wanted to insert a quote, but it did not flow smoothly. I remembered you could use square brackets to change the words in a quote, but I was not exactly sure how to do that. So I asked ChatGPT how to use square brackets for in-text citations. My steps for using AI is to come up with a question and then ask AI. AI is like Google. I use it to get ideas, clarify concepts I am unsure about, and assist with grammar. AI just tends to be more specific and quicker than Google.”

Using Artificial Intelligence to complete an essay or other assignment that a student then submits as their own can amount to plagiarism because of how AI works. AI chatbots generate their responses using models trained on large amounts of text, much of it gathered from across the Internet and written by other people. This means that a student who passes off AI-generated work as their own is effectively using other people's work and information without credit. Such behavior is deemed plagiarism, according to College of DuPage’s Code of Academic Conduct. Using AI to cheat outright can carry severe consequences at colleges and universities, including College of DuPage, such as failing an entire class, suspension and even expulsion.

Tan believes that how often students use AI, and how easily that use is noticed, may depend on the particular class or subject.

“I’m able to recognize or observe when a student’s work on a larger scale doesn’t match their work that they do on a daily basis,” Tan said. “That’s going to raise red flags, whereas someone in a science or philosophy class, where writing isn’t a regular part of the curriculum, might be able to get away with it more frequently because the teacher or instructor doesn’t consistently see their work. As a result, they might not have a clear understanding of the students’ capabilities, limitations, or knowledge.”

Ali’s experience suggests that instructors’ stances on Artificial Intelligence also vary from class to class.

“Professors say not to use AI to write our papers,” she recalled. “My social psychology professor said we can use AI as a tool for idea inspiration and perhaps grammar. My ethics professor said if we used AI to write the paper, we get a zero.”

This new age of Artificial Intelligence also poses a danger to honest, well-intentioned students, who may be falsely accused of using AI in their work. According to a September 2023 study conducted by Drexel University, software marketed as able to detect the use of AI in student work is not always accurate. Such errors can lead to diligent students being wrongly accused of using AI and potentially facing severe consequences for “cheating” or “plagiarism” that never took place. Students in technology-based courses such as computer science are especially vulnerable, because AI can provide information on programming and other technical topics, and students learning such material may find it difficult to provide solid evidence of their academic integrity.

Ali believes she is unlikely to face such an accusation anytime soon, because her writing differs from the polished style AI chatbots tend to produce.

“I am not really worried about [being wrongly accused of using AI],” she said. “Because my writing style is not like an AI’s.”

Baker advises COD students to use AI conscientiously.

“If you’re using [AI] to create a study guide, if you’re using it to create help aids, then that’s great because ultimately you are learning the material and you’re able to do the work,” Baker said. “But if you’re using it as an alternative method to not do the work, then I would advise against it.”

Ali agrees with Baker’s stance on AI.

“Students should use AI responsibly,” she said. “That means no copying and pasting, and [not] having AI do assignments for you. There should be consequences for students who cheat using AI.”

Tan encourages educators to maintain class practices that Artificial Intelligence cannot emulate or substitute, as a way of countering student dependence on AI.

“Student engagement, class discussion, project development, soft skills; those are things that college should be about,” Tan said. “And if we start to overemphasize the use of different technology where that becomes the underlying foundation of a class, then you put yourself in a position where that is going to be the foundation of the class. But if your teaching and your method and your pedagogy and your approach are at the core of your class, then everything else is just secondary, and everything else is just the tool that you can use to enhance the class.”

Students can learn more about COD’s policies on the use of AI by visiting the COD Library’s AI, Digital Literacy and Education webpage.